Overall Value

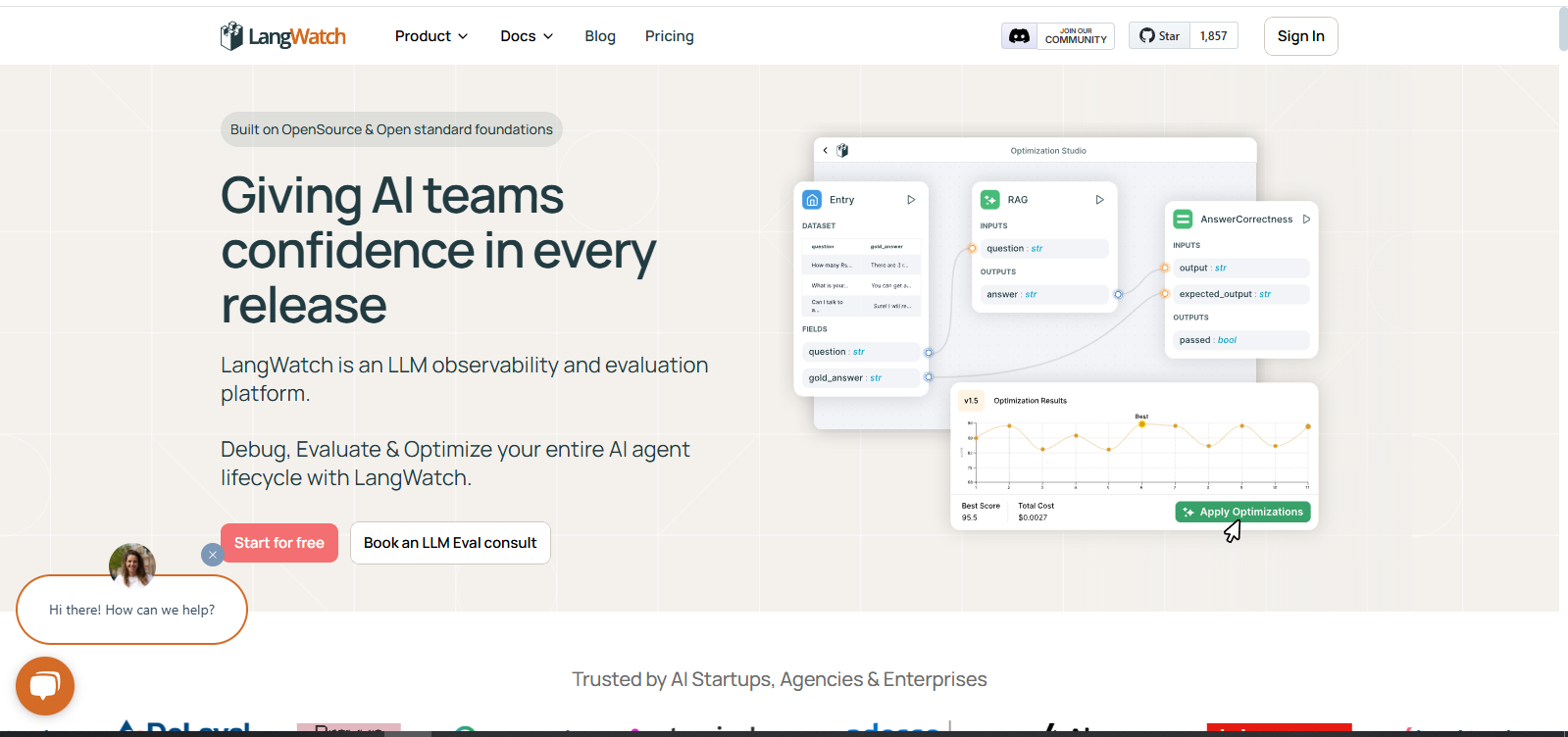

LangWatch is a platform for AI teams, researchers, and startups that want to streamline debugging and improve LLM output quality. From real-time tracing of prompts and token usage to model evaluation workflows, LangWatch brings clarity, speed, and structure to your AI builds.

Features

- Full-Stack Trace View: Inspect every interaction (prompts, variables, retries, and responses) across agents and frameworks.

- Live Cost & Latency Insights: Track API usage, latency spikes, and token spend in real time.

- Root Cause Finder: Pinpoint failures with contextual breadcrumbs and prompt snapshots.

- Prompt Playground: A no-code, test-and-tune interface for iterating on LLM inputs.

- Quality Check Automator: Set rules to auto-evaluate accuracy, tone, hallucinations, and prompt fit.

- Smart Monitoring Dashboards: Visualize metrics and trigger alerts when anomalies appear.

- Feedback Loops with Teams: Collaborate on debugging and use real-world inputs to improve prompts and model behavior.

- Agent Performance Reports: Share-ready visuals for stakeholders and product teams.
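To make the trace, cost, and quality-check ideas above more concrete, here is a minimal Python sketch of what a single traced LLM call might record and how a simple automated rule could flag it. This is not LangWatch's SDK or API: `Span`, `traced_call`, `check_rules`, `fake_llm`, the model name `example-model`, and the per-token prices are all hypothetical placeholders chosen for illustration.

```python
import time
from dataclasses import dataclass

# Hypothetical USD prices per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K = {"example-model": {"input": 0.0005, "output": 0.0015}}


@dataclass
class Span:
    """One traced LLM call (hypothetical structure, not LangWatch's data model)."""
    name: str
    model: str
    prompt: str
    response: str = ""
    input_tokens: int = 0
    output_tokens: int = 0
    latency_s: float = 0.0

    @property
    def cost_usd(self) -> float:
        # Token spend = input and output tokens, each priced per 1,000 tokens.
        p = PRICE_PER_1K[self.model]
        return (self.input_tokens * p["input"] + self.output_tokens * p["output"]) / 1000


def traced_call(name, model, prompt, llm_fn):
    """Wrap an LLM call and record its response, token counts, and latency."""
    start = time.perf_counter()
    response, in_tok, out_tok = llm_fn(prompt)
    latency = time.perf_counter() - start
    return Span(name, model, prompt, response, in_tok, out_tok, latency)


def check_rules(span, max_latency_s=2.0, banned=("As an AI",)):
    """Return the names of simple quality rules that the span violates."""
    failures = []
    if span.latency_s > max_latency_s:
        failures.append("latency")
    if not span.response.strip():
        failures.append("empty_response")
    for phrase in banned:
        if phrase in span.response:
            failures.append(f"banned_phrase:{phrase}")
    return failures


def fake_llm(prompt):
    # Stand-in for a real API call: returns (text, input_tokens, output_tokens).
    # Here "tokens" are approximated by whitespace-separated words.
    return f"Echo: {prompt}", len(prompt.split()), 3


span = traced_call("greet", "example-model", "Say hello politely", fake_llm)
print(f"cost=${span.cost_usd:.6f}, latency={span.latency_s:.4f}s, failures={check_rules(span)}")
```

In a real setup, `fake_llm` would be replaced by an actual provider call, and token counts would come from the provider's usage metadata rather than a word count.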

Use Cases

- 🛠️ Debugging prompt engineering failures before users see them

- 📊 Analyzing cost-performance trade-offs across LLMs

- 🔁 Creating scalable evaluation pipelines for QA teams

- 🤝 Collaborating with domain experts to refine agent behavior

- 🔍 Detecting and preventing model hallucinations or inaccuracies

- 🧪 Experimenting with prompting techniques such as Chain-of-Thought, ReAct, and more

Tech Specs

- Platform: Web app with a no-code UI and code-friendly integrations

- File Support: JSON logs, CSV exports, eval results

- LLM Compatibility: OpenAI, Anthropic (Claude), Azure, Hugging Face, Groq, and more

- Frameworks Supported: LangChain, DSPy, LiteLLM, Vercel AI SDK

- Deployment: Cloud, Self-Hosted, or Hybrid

- API: Available for custom model and workflow integrations

- Security: GDPR compliance, ISO 27001, role-based access control (RBAC)

- Pricing: Free plan available; Paid plans scale with usage

👉 Try for free or scale as you grow with enterprise-ready features.

FAQs

LangWatch works with most modern AI frameworks and LLM APIs, so there is no need to change your stack: just plug it in and go.

Non-technical team members can contribute too. The visual interface makes it easy for PMs, analysts, and domain experts to take part without writing code.

LangWatch is purpose-built for LLM applications, with a deep understanding of prompts, agents, retries, and AI behavior.

No retraining is required. LangWatch works on top of your existing workflows and helps improve how your application interacts with models, not the model weights themselves.

For enterprise needs, LangWatch offers hybrid deployment, role-based access control, and compliance with standards such as GDPR and ISO 27001.

Conclusion

LangWatch is more than a debugging tool: it acts as a co-pilot for your AI team. From tracking down bugs to boosting LLM performance and making sure your agents behave as expected, LangWatch simplifies complex AI workflows. Whether you’re an AI researcher, product manager, or engineer, it helps you move fast without breaking things.