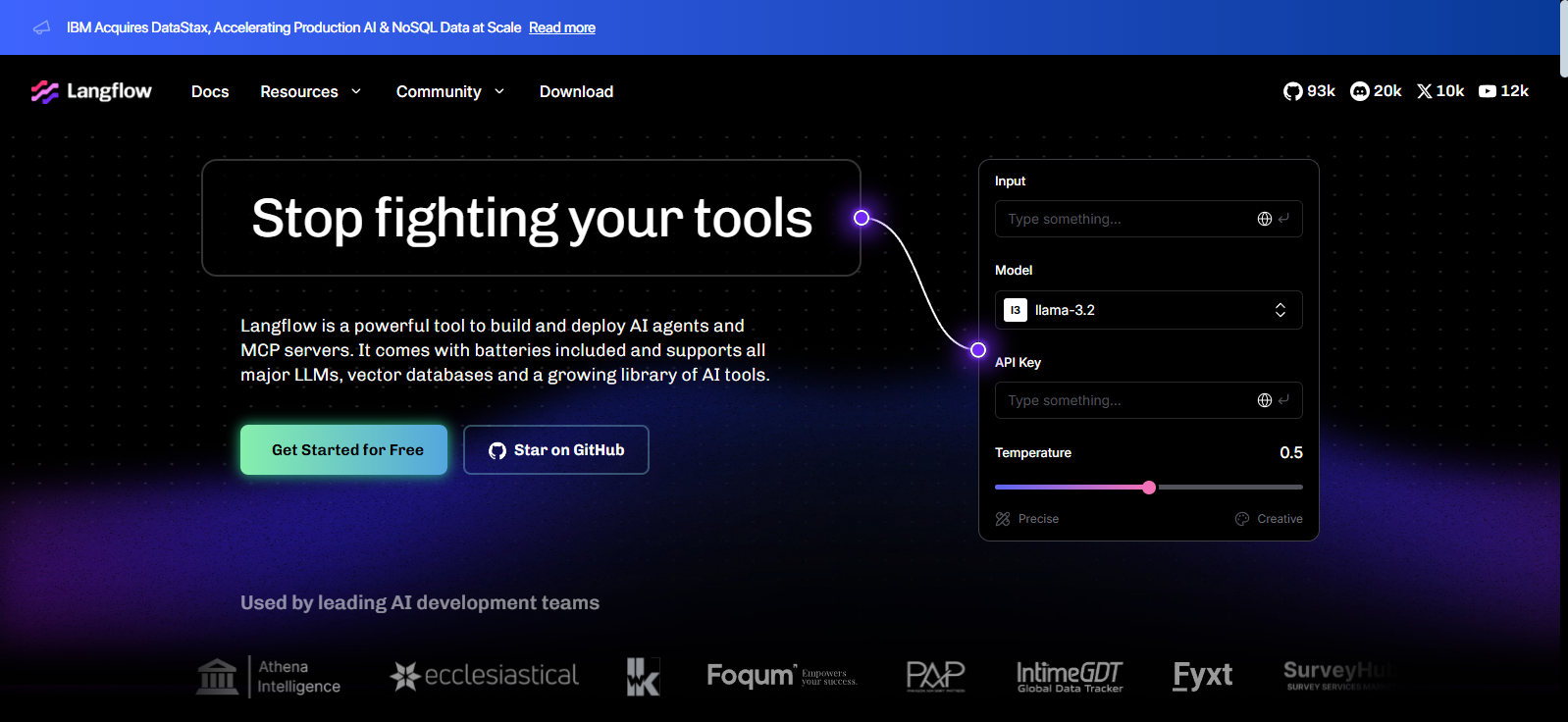

Overall Value

Langflow shortens development time by letting you visually map, test, and run intelligent agents in one place. Whether you’re building customer support bots, automation agents, or full-stack LLM-powered tools, Langflow turns your Python logic and AI stack into working, scalable apps that are ready to share, test, and deploy.

Portkey Product Review

Key Features

🧩 Visual Flow Builder

Design your AI workflows with drag-and-drop components, so you can skip boilerplate code and focus on what your agent actually does.

📦 Modular Agent Components

Use pre-built tools or create custom Python components to extend your agents and integrate APIs, databases, or external logic.

🔄 Multi-LLM Support

Connect with OpenAI, LLaMA, Claude, or your own hosted models—swap between them without reconfiguring your entire app.

🧠 Run Multiple Agents Simultaneously

Run a single agent or several agents in parallel, each with its own logic and tools.

⚙️ Flow-as-API Deployment

Turn your flows into API endpoints with just a few clicks—ideal for integration into any stack.
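As a rough illustration of calling a flow as an API, the Python sketch below builds a request with only the standard library. It assumes a local Langflow server on the default port 7860, a `POST /api/v1/run/<flow_id>` route authenticated with an `x-api-key` header, and a JSON body with `input_value`, `input_type`, and `output_type` fields. The flow ID and API key are hypothetical placeholders, and routes or field names may differ between Langflow versions, so compare against the API snippet your own instance generates for the flow.

```python
import json
import urllib.request

# Assumptions (verify against your Langflow version):
# - server on Langflow's default local port 7860
# - flows are run via POST /api/v1/run/<flow_id>
# Hypothetical placeholders: replace with your own flow ID and API key.
BASE_URL = "http://127.0.0.1:7860"
FLOW_ID = "your-flow-id"
API_KEY = "your-api-key"


def build_run_request(base_url: str, flow_id: str, message: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that runs a flow with one chat message."""
    payload = {
        "input_value": message,
        "input_type": "chat",
        "output_type": "chat",
    }
    return urllib.request.Request(
        url=f"{base_url}/api/v1/run/{flow_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


def run_flow(message: str) -> dict:
    """Send the request and decode the JSON response (needs a running server)."""
    req = build_run_request(BASE_URL, FLOW_ID, message, API_KEY)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a server running, `run_flow("Hello from my app")` returns the decoded JSON response. Keeping request construction separate from sending makes the payload easy to inspect or test without a live server.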

🚀 One-Click Cloud Deployment

Deploy your project to a secure, enterprise-grade cloud without managing infrastructure yourself.

🔍 Real-Time Testing & Iteration

Test as you build—adjust parameters like temperature, output length, and model type with live previews.
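The same kinds of parameters can also be overridden per call when a flow is exposed as an API. The sketch below assumes Langflow's run endpoint accepts a `tweaks` object that maps a component ID to field overrides. The component ID `OpenAIModel-abc12` and the field names `temperature`, `max_tokens`, and `model_name` are hypothetical; the real ones depend on the components in your flow.

```python
import json


def build_payload_with_tweaks(message: str, component_id: str, **overrides) -> dict:
    """Build a run payload that overrides fields on one component.

    Assumption: the server accepts {"tweaks": {component_id: {field: value}}}.
    """
    return {
        "input_value": message,
        "input_type": "chat",
        "output_type": "chat",
        # Per-request overrides; assumed not to modify the saved flow
        # (verify against your Langflow version).
        "tweaks": {component_id: dict(overrides)},
    }


# Hypothetical component ID and field names: copy the real ones from your flow.
payload = build_payload_with_tweaks(
    "Summarize this support ticket",
    "OpenAIModel-abc12",
    temperature=0.2,
    max_tokens=256,
    model_name="gpt-4o-mini",
)
print(json.dumps(payload, indent=2))
```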

🔗 Toolchain Friendly

Integrates easily with vector databases, custom backends, and third-party services—use it as a central brain for your agent architecture.

Use Cases

- 🧠 AI Product Teams: Rapidly prototype and deploy custom workflows for your app features.

- 🗣️ Conversational AI Developers: Build dynamic, multi-turn agents using reusable flows.

- 🛠️ LLM Engineers: Swap models, add memory, and control inference without rewriting code.

- 📡 Backend Engineers: Orchestrate data, APIs, and AI logic into scalable agent pipelines.

- 🎓 Researchers & Educators: Teach AI workflow design through interactive visual projects.

Technical Specs

- Interface: Web-based, local or cloud-hosted

- Customization: Python custom components, JSON input/output, prompt tuning

- Model Compatibility: OpenAI, Anthropic, LLaMA, Hugging Face, custom models

- Deployment: Self-hosted, or Langflow’s enterprise-grade cloud

- Integrations: Vector DBs, APIs, Notebooks, RAG frameworks

- License: Open-source with enterprise options


FAQs

Coding is not strictly required. You can build with drag-and-drop blocks, but Python knowledge helps when you want to extend custom components.

Custom models are supported. Langflow supports 1600+ models and lets you connect custom LLMs through unified APIs.

Langflow gives you both no-code visual editing and deep Python control, making it ideal for fast iteration and advanced customization.

Deployment is flexible. You can self-host Langflow or use its cloud platform for instant deployment.

Multi-agent workflows are supported. Langflow was built with agent orchestration in mind, so you can run multiple agents and manage their tools from one interface.

Conclusion

Langflow is your bridge from concept to deployment—combining visual design, smart integrations, and open-source flexibility to accelerate AI agent development. Whether you’re a solo builder or a large dev team, it empowers you to go from prototype to production—fast.