Overall Value

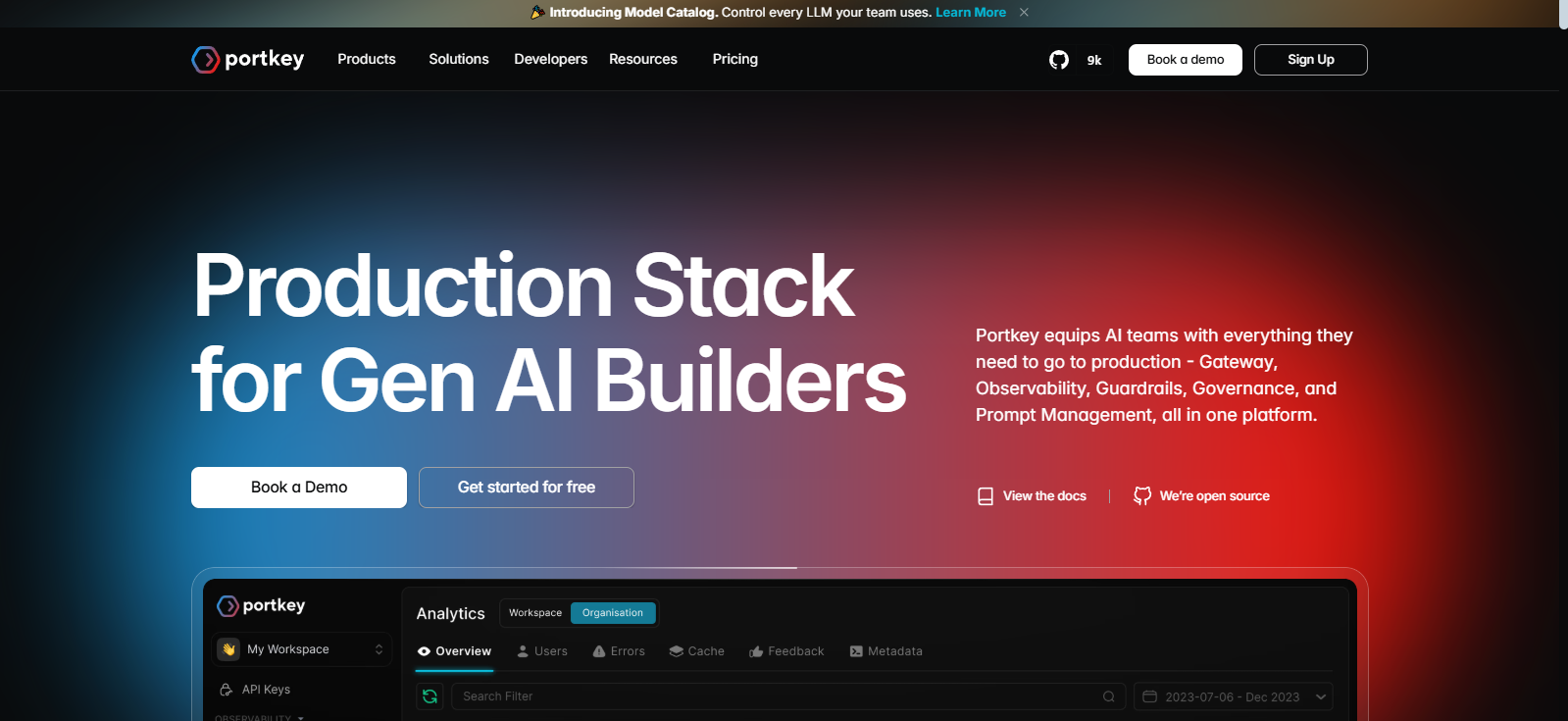

Portkey brings developer control, visibility, and efficiency to your AI stack. It unifies prompt engineering, caching, logging, governance, and security in a single platform. With support for 1,600+ models, it suits startups and enterprise teams that need to move from prototype to production quickly, securely, and at scale.

Portkey Product Review

Key Features

✅ Unified LLM Gateway to access 1,600+ models via a single API

📊 Real-time Observability with token logs, cost metrics, and latency tracing

🛡️ Security Guardrails like PII redaction, token budgeting, and prompt validation

🧠 Prompt Studio for versioning, A/B testing, and multi-model prompt development

⚙️ MCP Client for low-latency tool calling and AI agent orchestration

🔐 Enterprise-Ready Controls including RBAC, audit logs, and single sign-on

⚡ Smart Caching Engine to reduce duplicate requests and cut LLM costs

🌐 Instant Integration with Python, Node.js, cURL, and other popular stacks
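To make the guardrail idea concrete, here is a minimal sketch of PII redaction applied to a prompt before it is sent to a model. It is not Portkey's implementation: the `PII_PATTERNS` table and the `redact_pii` function are hypothetical names invented for this illustration, and the two regular expressions cover only simple email and US-style phone formats.

```python
import re

# Illustrative patterns only (an assumption of this sketch);
# real PII detection needs far broader coverage than two regexes.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(prompt: str) -> str:
    """Replace each pattern match with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


redacted = redact_pii("Reach Ana at ana@example.com or 555-123-4567.")
print(redacted)  # Reach Ana at [EMAIL] or [PHONE].
```

A gateway-level guardrail would run a check like this on every request, so individual applications do not each need their own redaction logic.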

Use Cases

🚀 AI teams deploying multi-agent workflows with external tool usage

👨‍💻 Developers managing prompt-heavy applications across multiple LLMs

💼 Enterprises enforcing data security and governance across AI teams

💡 Product teams building GenAI-powered features with real-time feedback

📉 Engineers tracking and reducing LLM costs with token-level analytics

🧪 Prompt engineers needing testing and experimentation at scale
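The cost-tracking use case above can be pictured with a small sketch of token-level accounting against a budget. Everything here is an assumption made for illustration: the `UsageTracker` class, the model names, and the per-1K-token prices are hypothetical and do not reflect Portkey's analytics or any provider's real pricing.

```python
from dataclasses import dataclass, field

# Hypothetical (input, output) prices in USD per 1,000 tokens.
PRICES_PER_1K = {
    "model-a": (0.0005, 0.0015),
    "model-b": (0.003, 0.015),
}


@dataclass
class UsageTracker:
    budget_usd: float
    spent_usd: float = 0.0
    log: list = field(default_factory=list)

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> float:
        """Compute the cost of one call, add it to the running total, and log it."""
        in_price, out_price = PRICES_PER_1K[model]
        cost = prompt_tokens / 1000 * in_price + completion_tokens / 1000 * out_price
        self.spent_usd += cost
        self.log.append((model, prompt_tokens, completion_tokens, cost))
        return cost

    def over_budget(self) -> bool:
        """True once cumulative spend exceeds the configured budget."""
        return self.spent_usd > self.budget_usd


tracker = UsageTracker(budget_usd=0.01)
tracker.record("model-a", prompt_tokens=2000, completion_tokens=1000)
tracker.record("model-b", prompt_tokens=1000, completion_tokens=500)
print(tracker.over_budget())  # True
```

Keeping a per-call log alongside the running total is what makes it possible to see which model or prompt is driving spend, rather than only the final bill.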

Technical Specs

Platform: Web-based with CLI and SDK integration

Supported Models: 1,600+ LLMs, including models from OpenAI, Anthropic (Claude), Cohere, Groq, and Mistral

Security: SOC 2, HIPAA, GDPR compliant

Governance: RBAC, Single Sign-On, team-level resource control

Pricing: Free plan available, premium tiers for full-scale governance

Access: No infrastructure change needed; plug-and-play setup
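As a rough illustration of what "many models via a single API" means in practice, the sketch below builds the same chat request for two different models, changing only the model string. The endpoint URL, the `Authorization` header, the model names, and the `build_chat_request` helper are all placeholders assumed for this example, not Portkey's documented values; consult the official docs for the real endpoint and headers. No request is actually sent.

```python
import json
import urllib.request

# Placeholder endpoint (an assumption of this sketch, not a real gateway URL).
GATEWAY_URL = "https://gateway.example.com/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request; only `model` differs per provider."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical model identifiers; the request shape is identical for both.
models = ("provider-a/model-x", "provider-b/model-y")
requests_by_model = {
    m: build_chat_request("YOUR_API_KEY", m, "Summarize this ticket.") for m in models
}
```

Because the URL, headers, and message format stay fixed, switching providers becomes a one-string change in application code.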


FAQs

Portkey supports 1,600+ models through one API, so you are never locked in to a single provider.

Through caching, usage tracking, and budget alerts, Portkey helps prevent overspending on repeated or inefficient queries.

Integration is not difficult: three lines of code are enough to add it to your existing stack.

It scales from indie developers to enterprise-grade infrastructure.

Portkey is built for multi-agent orchestration, with observability and tooling support included.
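The caching mentioned in the answers above can be sketched as an exact-match cache keyed on the model and messages. This is a simplified illustration under stated assumptions, not Portkey's caching engine: `ResponseCache`, `get_or_call`, and the stand-in `fake_model` function are hypothetical names, and a production cache would also need expiry and size limits.

```python
import hashlib
import json


class ResponseCache:
    """Exact-match cache: identical (model, messages) pairs reuse one response."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, messages):
        # Serialize deterministically so equal requests hash to the same key.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model, messages, call_model):
        key = self._key(model, messages)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call_model(model, messages)
        self._store[key] = response
        return response


def fake_model(model, messages):
    # Stand-in for a real LLM call, used only for this demonstration.
    return f"{model} answered: {messages[-1]['content'].upper()}"


cache = ResponseCache()
msgs = [{"role": "user", "content": "hello"}]
first = cache.get_or_call("model-a", msgs, fake_model)
second = cache.get_or_call("model-a", msgs, fake_model)
print(cache.hits, cache.misses)  # 1 1
```

The second call never reaches the model, which is where the cost saving on duplicate requests comes from.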

Conclusion

Portkey isn’t just a gateway—it’s the mission control for GenAI in production. With deep integrations, real-time observability, and smart governance tools, Portkey removes the friction from AI development. It’s the fastest way to go from sandbox to scalable, secure deployments—without the chaos.

💡 Power up your LLM stack the smart way—with Portkey.